The Future of AI Support Infrastructure Design

As businesses increasingly rely on artificial intelligence to enhance customer support, understanding the design of AI support infrastructure is becoming essential. In today’s digital-first world, the architecture of AI systems directly impacts how efficiently and effectively an organization serves its customers.

For decision-makers—especially VPs, Heads of Support, and IT Managers—investing in scalable, reliable AI infrastructure is not just a modernization effort, but a competitive necessity.

The Evolution of AI Support Infrastructure

AI in customer support has evolved from simple, rule-based systems into intelligent, responsive infrastructures powered by machine learning, natural language processing (NLP), and real-time analytics.

According to McKinsey, over 50% of customer interactions could be automated through AI—highlighting the need for robust infrastructure to manage and optimize these interactions.

The transition from on-premises setups to cloud-native or hybrid architectures is a defining shift. Cloud infrastructure allows organizations to scale more efficiently, deploy faster, and reduce costs—while hybrid models combine flexibility with data security and performance optimization.
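To make the hybrid idea concrete, here is a minimal Python sketch of one common pattern: messages that appear to contain sensitive data are routed to an on-premises model, and everything else goes to a cloud endpoint. The regex patterns and the `"on_prem"`/`"cloud"` labels are illustrative assumptions, not a recommended detection method. A production system would rely on a dedicated PII-detection service.

```python
import re

# Illustrative patterns only; a production system should use a vetted
# PII-detection service instead of hand-written regexes.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),      # US SSN-style number
    re.compile(r"\b(?:\d[ -]?){13,16}\b"),     # payment-card-style digit run
]


def route_request(message: str) -> str:
    """Return "on_prem" for messages that look sensitive, otherwise "cloud"."""
    if any(pattern.search(message) for pattern in SENSITIVE_PATTERNS):
        return "on_prem"   # keep regulated data inside the private environment
    return "cloud"         # use elastic cloud capacity for everything else


if __name__ == "__main__":
    for msg in ["How do I reset my password?", "My SSN is 123-45-6789"]:
        print(route_request(msg), "<-", msg)
```

The routing decision is kept in one small function so the boundary between private and cloud infrastructure can be audited and tightened without touching the models on either side.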

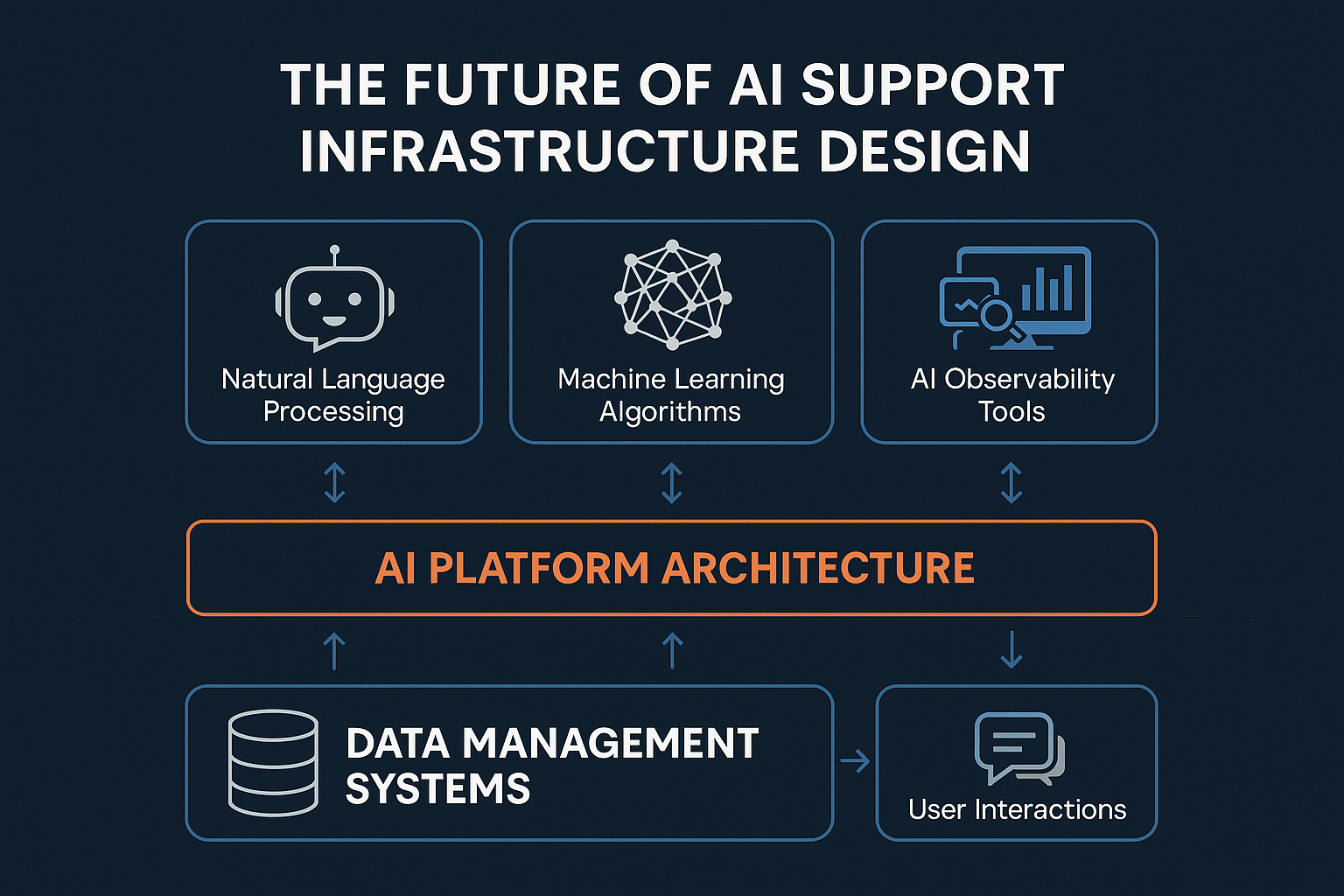

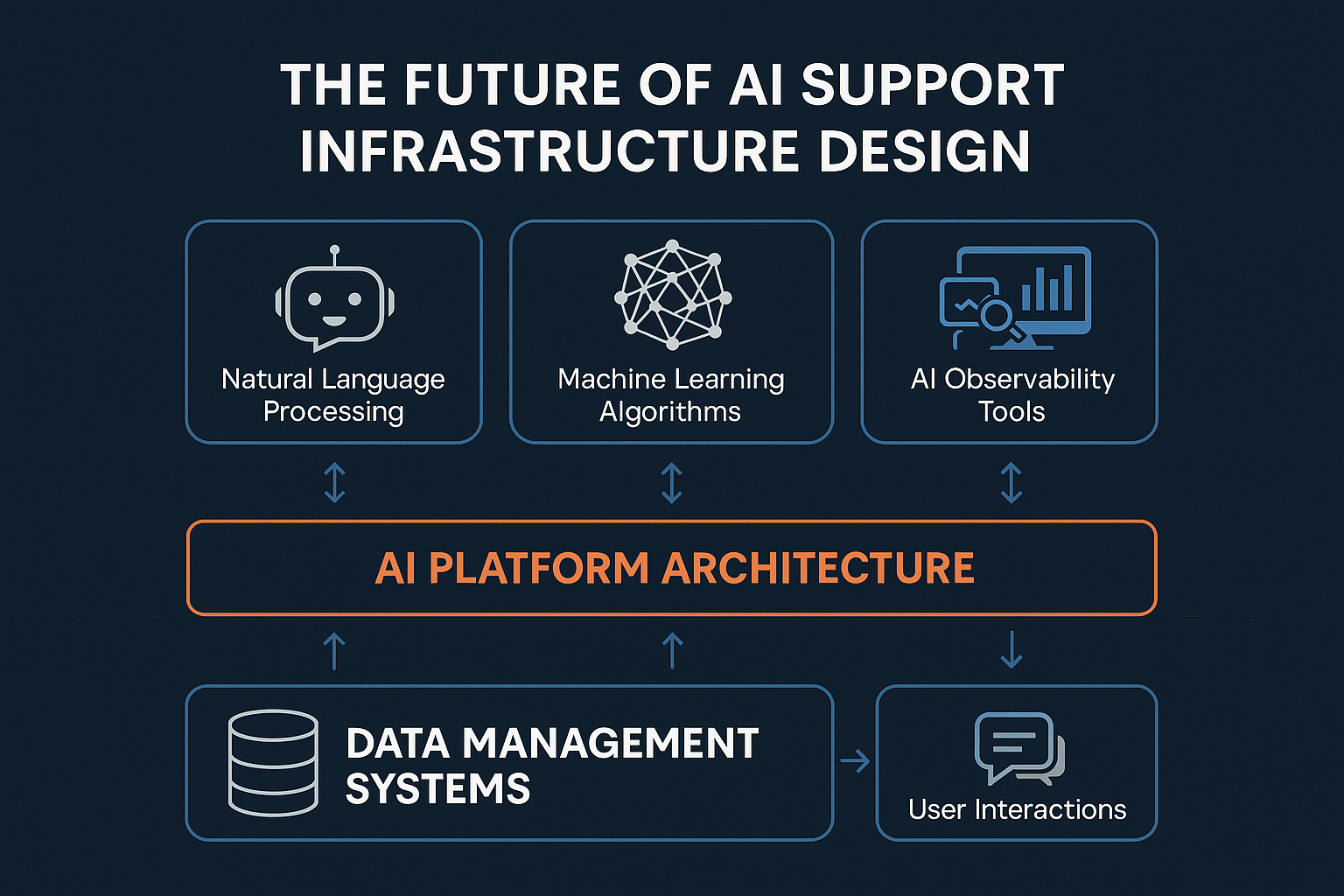

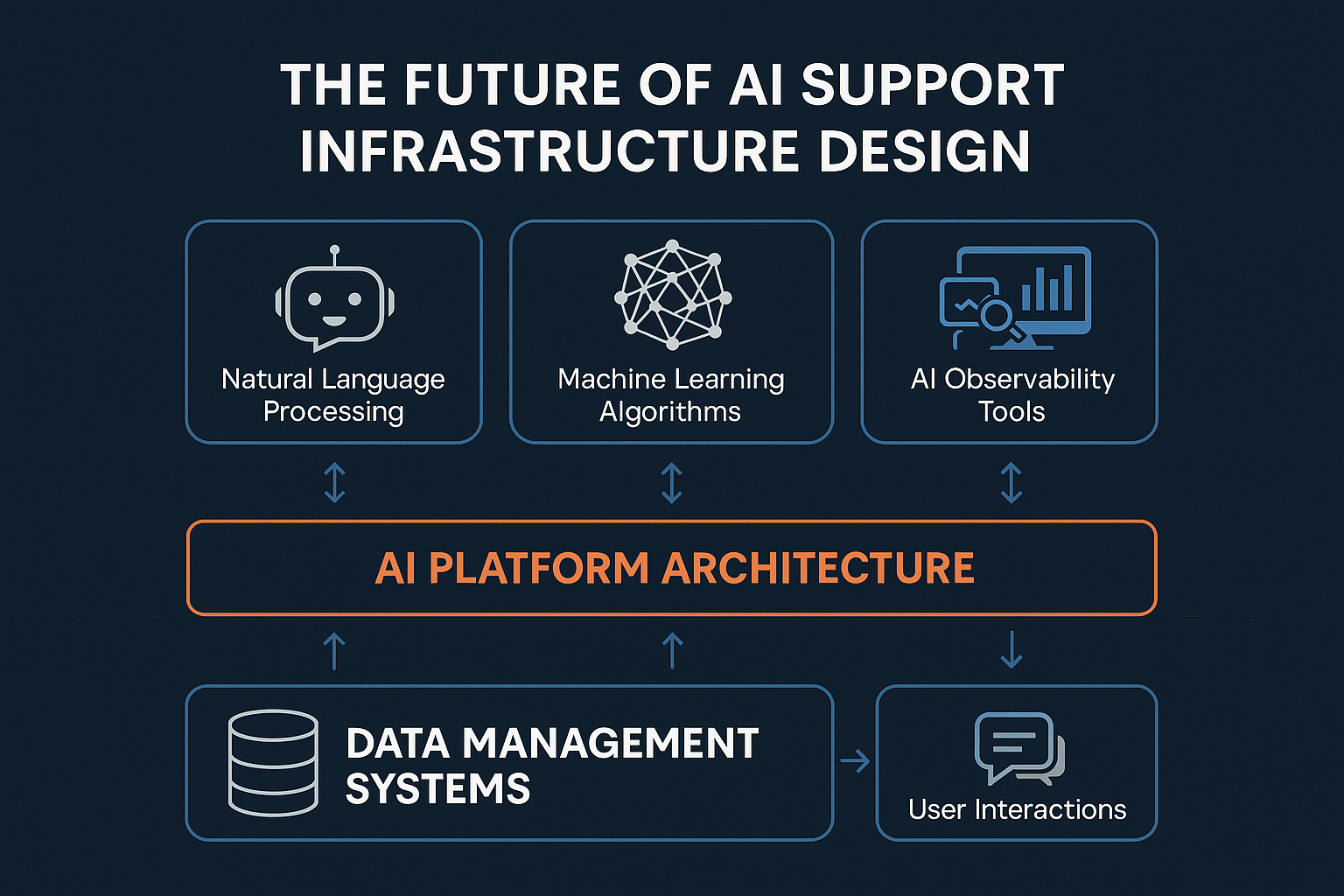

Core Components of a Modern AI Support Stack

Building a resilient and scalable AI support system requires a combination of advanced tools and technologies:

- Natural Language Processing (NLP): Enables machines to interpret and generate human language, forming the basis for intelligent customer interactions.

- Machine Learning Algorithms: Continuously improve response accuracy and personalization by learning from historical and real-time data.

- AI Infrastructure Tools: Frameworks like TensorFlow and PyTorch are used to build and run AI models, while orchestration platforms like Kubernetes handle their scalable deployment and maintenance.

- Data Management Systems: Technologies such as Apache Kafka (event streaming), Apache Spark, and Apache Hadoop (distributed processing) move and process the massive datasets that AI accuracy and speed depend on.

- AI Observability Tools: Essential for monitoring, logging, and troubleshooting AI model behavior. They help maintain reliability and compliance at scale.
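As an illustration of the signals observability tooling records, the following minimal Python sketch wraps a model call so that every prediction is logged with its latency and confidence, and low-confidence answers are flagged as warnings. `classify_intent` is a hypothetical keyword-based stand-in for a real NLP model, and the 0.5 threshold is an assumption. Dedicated observability platforms add dashboards, tracing, and alerting on top of signals like these.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("ai_observability")


def observe(model_name: str, low_confidence: float = 0.5):
    """Log latency, label, and confidence for every call to the wrapped model."""
    def decorator(predict):
        @wraps(predict)
        def wrapper(text: str):
            start = time.perf_counter()
            label, confidence = predict(text)
            latency_ms = (time.perf_counter() - start) * 1000
            logger.info(
                "model=%s label=%s confidence=%.2f latency_ms=%.1f",
                model_name, label, confidence, latency_ms,
            )
            # Low-confidence answers are candidates for human review.
            # The customer's text is deliberately not logged, to limit exposure of personal data.
            if confidence < low_confidence:
                logger.warning("model=%s low-confidence prediction (label=%s)", model_name, label)
            return label, confidence
        return wrapper
    return decorator


@observe("intent-classifier-v1")
def classify_intent(text: str):
    """Hypothetical keyword-based stand-in for a real NLP intent model."""
    lowered = text.lower()
    if "refund" in lowered:
        return "billing", 0.92
    if "password" in lowered:
        return "account_access", 0.88
    return "general", 0.40


if __name__ == "__main__":
    classify_intent("I'd like a refund for my last order")
    classify_intent("Do you ship to Canada?")
```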

Challenges in Designing AI Infrastructure

Despite the benefits, designing AI support infrastructure comes with critical challenges:

- Integration Complexity: Retrofitting new AI layers into legacy systems demands strategic architecture and technical coordination.

- Data Privacy & Compliance: As AI collects and processes customer data, ensuring compliance with frameworks like GDPR and HIPAA becomes increasingly complex.

- Dynamic Scalability: Systems must scale automatically without sacrificing performance under fluctuating workloads.

- Cost vs. Performance Balance: Managing computational load while keeping infrastructure costs in check requires ongoing optimization.
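Dynamic scalability and the cost-versus-performance balance often come down to one calculation. The sketch below follows the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, with a tolerance band that suppresses small adjustments and min/max bounds that cap spend. It is a simplified model for reasoning about capacity, not the autoscaler's actual code, and the default bounds are placeholder values.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler-style replica calculation."""
    ratio = current_metric / target_metric
    # Skip small adjustments so the system does not thrash around the target.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # min_replicas protects baseline performance; max_replicas caps cost.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))    # busy: scale out
    print(desired_replicas(4, 300, 60))   # spike: held at max_replicas
```

For example, 4 replicas running at 90% CPU against a 60% target scale to ceil(4 * 1.5) = 6, while a spike that would call for 20 replicas is held at the `max_replicas` ceiling of 10. Tuning those two bounds is where the performance and cost trade-off becomes explicit.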

Strategies for Scalable AI Support

Implementing a future-ready AI support stack involves deliberate planning and smart technology choices:

- Microservices Architecture: Enables independent scaling and updating of services, minimizing disruption.

- Containerization: Tools like Docker and Kubernetes allow consistent deployment and orchestration across environments.

- Automated Data Pipelines: Workflow orchestrators like Apache Airflow schedule and monitor the collection, transformation, and delivery of data, keeping the inputs behind AI insights fresh and accurate.

- Edge Computing: Reduces latency and enhances real-time analytics by processing data close to the source.

- Continuous Improvement: Feedback loops monitor performance and drive iterative improvements to AI models and service workflows.
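A continuous-improvement feedback loop can start as simply as measuring how often the AI resolves each type of request without a human. This Python sketch assumes a hypothetical `Interaction` record with an `intent` label and a `resolved_by_ai` flag. It flags intents whose resolution rate falls below a chosen threshold so the team knows where to retrain models or rework workflows first. The 0.7 rate and the 20-interaction minimum are placeholder values.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Interaction:
    intent: str            # label assigned by the NLP layer
    resolved_by_ai: bool   # True if no human agent was needed


def intents_needing_review(interactions, min_rate=0.7, min_volume=20):
    """Return intents whose AI resolution rate is below min_rate, worst first.

    Intents with fewer than min_volume interactions are skipped because
    their rates are too noisy to act on.
    """
    totals = defaultdict(int)
    resolved = defaultdict(int)
    for item in interactions:
        totals[item.intent] += 1
        resolved[item.intent] += int(item.resolved_by_ai)

    rates = {
        intent: resolved[intent] / count
        for intent, count in totals.items()
        if count >= min_volume
    }
    return sorted(
        (intent for intent, rate in rates.items() if rate < min_rate),
        key=rates.get,
    )
```

Running this on a schedule, for example as one task in an orchestrated pipeline, turns the feedback loop into a routine report rather than an ad hoc investigation.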

The Role of AI Platform Architecture

AI platform architecture is the foundation of system reliability and flexibility. A strong architecture:

- Encourages modularity, so components can evolve independently.

- Supports open standards for API integrations with CRMs, ERPs, ticketing systems, and analytics tools.

- Enables seamless data flow across systems, ensuring consistent and contextual customer experiences.

For IT managers, prioritizing interoperability and vendor-agnostic platforms simplifies future scaling and integration.
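One way to keep a platform vendor-agnostic is to have the AI layer depend on a small interface rather than on any one vendor's SDK. In this Python sketch, `TicketingSystem`, `InMemoryTicketing`, and `escalate` are hypothetical names. A real deployment would add one adapter per vendor, each wrapping that vendor's API, while the escalation logic stays unchanged.

```python
from typing import Protocol


class TicketingSystem(Protocol):
    """Hypothetical vendor-neutral interface; each vendor gets its own adapter."""

    def create_ticket(self, subject: str, body: str) -> str: ...

    def add_comment(self, ticket_id: str, text: str) -> None: ...


class InMemoryTicketing:
    """Stand-in adapter for tests; a real adapter would call a vendor's REST API."""

    def __init__(self) -> None:
        self.tickets: dict[str, list[str]] = {}

    def create_ticket(self, subject: str, body: str) -> str:
        ticket_id = f"T-{len(self.tickets) + 1}"
        self.tickets[ticket_id] = [f"{subject}: {body}"]
        return ticket_id

    def add_comment(self, ticket_id: str, text: str) -> None:
        self.tickets[ticket_id].append(text)


def escalate(system: TicketingSystem, question: str, ai_summary: str) -> str:
    """Hand an unresolved conversation to human agents, whatever vendor backs `system`."""
    ticket_id = system.create_ticket("Escalated from AI assistant", question)
    system.add_comment(ticket_id, f"AI summary: {ai_summary}")
    return ticket_id
```

Because `Protocol` uses structural typing, an adapter only needs matching method signatures. It does not have to inherit from the interface, which keeps vendor-specific code out of the core support logic.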

Future Trends in AI Infrastructure Design

Looking ahead, several key trends are shaping the next generation of AI support systems:

- AI Governance & Ethics: Transparent systems and ethical use frameworks will be essential as AI becomes integral to customer decision-making.

- Quantum Computing: Emerging quantum computing capabilities may eventually unlock unprecedented speed and scale in AI processing.

- AI-Driven DevOps: Leveraging AI for system health monitoring, automated testing, and issue resolution will improve overall infrastructure resilience.

- Hyper-Automation: The convergence of AI, machine learning, and robotic process automation (RPA) will accelerate complex decision-making and task execution.

Conclusion

In an era where customer experience is a competitive advantage, investing in the right AI support infrastructure is a strategic imperative. Forward-thinking leaders must go beyond tools—they must build systems that scale, adapt, and evolve with the needs of both the customer and the business.

Whether through microservices, edge computing, or AI observability platforms, a modern infrastructure design allows businesses to future-proof their support operations.

Try Twig for free now and discover how a well-architected AI support infrastructure can transform the way you deliver customer service.
